The Apprenticeship Conundrum Part 2 of?: Can We Build It? Yes We Can!

The current and future skills required for junior tenured colleagues

Jay Scanlan

Experienced PE executive, advisor, and operating professional

June 24, 2025

In Mary Shelley’s Frankenstein, the monster is not evil by design – it is abandoned, misunderstood, and untrained. In Part 1 of this series, I argued that we are at a similar inflection point in the enterprise services world. We’ve built the machine – GenAI – but we haven’t yet built the systems, mindsets, or skills to wield it wisely. Now, in Part 2, I want to test a hypothesis: we must move services professionals from doers to builders.

This isn’t just a semantic shift. It’s a redefinition of value, identity, and career progression in consulting, legal, executive search, technology, and other high-cognitive-content fields. It demands a new apprenticeship model – one that doesn’t just teach how to do the work, but how to design the work. Below, I’ll expand on this idea with research and perspectives from academia, industry, and government, and outline a framework for the skills needed in the GenAI era.

From Doers to Builders: Rethinking Apprenticeship in the GenAI Era

The core idea is that the “doing” in many service jobs is being automated or augmented, shifting the human edge upstream to designing and orchestrating work. Traditionally, junior professionals learned by doing – executing tasks, grinding through analyses, and making slide decks – gradually earning the right to shape the approach. But GenAI is collapsing that ladder. Routine execution is increasingly handled by AI, from drafting documents to analyzing data. What’s left – and growing in importance – is the architecture of the work: devising the right questions, shaping AI prompts and workflows, applying judgment to AI outputs, and integrating multiple tools and people into solutions. In other words, moving from task performer to solution builder.

This shift is happening for a reason. AI “co-pilots” can now take on repetitive and labor-intensive tasks, from writing boilerplate text to analyzing large datasets in seconds. As AI pioneer Kai-Fu Lee notes, “AI will increasingly replace repetitive jobs, not just blue-collar work but a lot of white-collar work. … That’s a good thing because what humans are good at is being creative, being strategic, and asking questions that don’t have answers.”[1] The value we bring is moving to those very human capabilities – creativity, strategy, complex problem-solving – which cannot be automated. It echoes a question posed in a recent Atlantic commentary: “Why should humans do anything, if machines can do it better?”[2] The answer lies in focusing on what machines cannot do alone – the imaginative, empathetic, and ethical dimensions of work that require human judgment.

In my conversations with enterprise services clients, I generally lay out four transformational impacts of GenAI on these firms – from ways of working (how core tasks are executed) to domain solutions (how we deliver client impact), business models (how we capture value), and crucially, skills & capabilities (how the human edge moves upstream)[3].

This last point is the crux: if we don’t evolve our apprenticeship and training models, we risk creating a generation of professionals who are neither doing nor building – just watching. Early-career consultants and lawyers could end up sitting idle, overseeing an AI that does the work, but without the know-how to add value beyond what the AI can do. That scenario is a recipe for stagnation and lost talent.

External data and industry sentiment strongly reinforce this concern. A recent survey noted that after companies raced to implement GenAI, employees were 'expected to adapt almost overnight, learning new skills and a new way of working'[4]. Yet many firms haven’t put in place the training or structures to support that. Over 78% of organizations are now using GenAI in at least one business function, but more than 80% don’t see a tangible impact on the bottom line[4]. Why? Because people need new skills and models to harness the tech. In fact, there is a 'massive visibility gap' between C-suite ambition and employee readiness: while 79% of executives are confident in meeting AI transformation goals, only 28% of employees feel adequately trained, and just 25% say they can use AI effectively in their work[4]. This points to a huge apprenticeship and upskilling challenge. As another analysis quipped, some executives seemed to think just adding AI would be a silver bullet, forgetting that 'AI’s promise won’t be realized through technology alone; it takes people.'[4]

Meanwhile, clients and markets are starting to expect this evolution. In one anecdote, a consulting partner noted some clients question paying for junior staff if those juniors are just doing tasks an AI could handle: “We’ve had clients say, ‘We’re not prepared to pay for junior staff…can’t you do this with GenAI?’”[5]. In other words, if all a first- or second-year consultant is doing is cranking out slides or spreadsheets that an AI now can produce, the traditional apprenticeship model (where those junior colleagues learn by doing 'grunt work') not only loses value – it becomes a liability. Firms must respond by redeploying young professionals to higher-value activities sooner.

Conversely, those who embrace the builder ethos can gain an edge. Many business leaders are echoing the mantra that “AI won’t replace you – but someone using AI will.” In fact, Rob Thomas, IBM Senior VP, put it bluntly: 'AI is not going to replace managers, but managers who use AI will replace those who do not.'[1] Organisations that reskill their people into 'AI-oriented builders' will outcompete those that don’t. Recognizing this, two-thirds of employers plan to hire for AI-related skills, and half of companies expect to fundamentally reorient their business around AI integration[6]. At the same time, 40% of employers anticipate reducing roles in areas where automation can take over[6], meaning the “pure doer” jobs are on the chopping block. All signs point to one conclusion: the world is moving. The question is: are we?

Notably, academics and analysts are actively evaluating this shift. Wharton professor Ethan Mollick has written extensively about how GenAI shifts the value from execution to orchestration in organizations. In his Substack (“One Useful Thing”) and other writings, he argues that the future belongs to those who can manage AI – not just use it casually. This means developing people who know how to delegate to AI agents, critique their output, and knit together human+AI workflows. In a recent MIT Sloan article, Mollick even suggests that traditional org structures will be upended and that companies need to “build new capabilities, and fundamentally new ways of thinking about work,” starting with a mindset shift[7]. In other words, transitioning your workforce from doers to builders isn’t just an operational tweak – it’s part of a larger transformation of how we define roles and collaboration in the age of AI.

Voices from the business press underscore the urgency. A Forbes Tech Council piece pointed out that employees have been “expected to adapt almost overnight” to AI-driven workflows[4], and that organizations must now rethink how they train and deploy talent. Another report on the consulting industry noted that 56% of consultants are saving hours per day thanks to AI, allowing them to reinvest time in higher-value activities[8] – but only if they’ve been trained to do so. The same report emphasized this is “not just incremental efficiency. It’s a structural shift in how value is created and delivered,” urging firms to redesign their delivery models to capitalize on it[8].

Even government and public institutions recognize the need for a skills shift. The World Economic Forum’s Future of Jobs 2025 report estimates 44% of workers’ core skills will change by 2027, and highlights a surge in demand for skills like analytical thinking, creative thinking, and AI literacy[9]. Governments are responding: for example, the U.S. Congress introduced the bipartisan AI Training Expansion Act of 2025 to ensure public sector employees are trained to use AI effectively at all levels[10]. The message is clear across sectors – we need to rapidly equip our workforce with a new mix of skills.

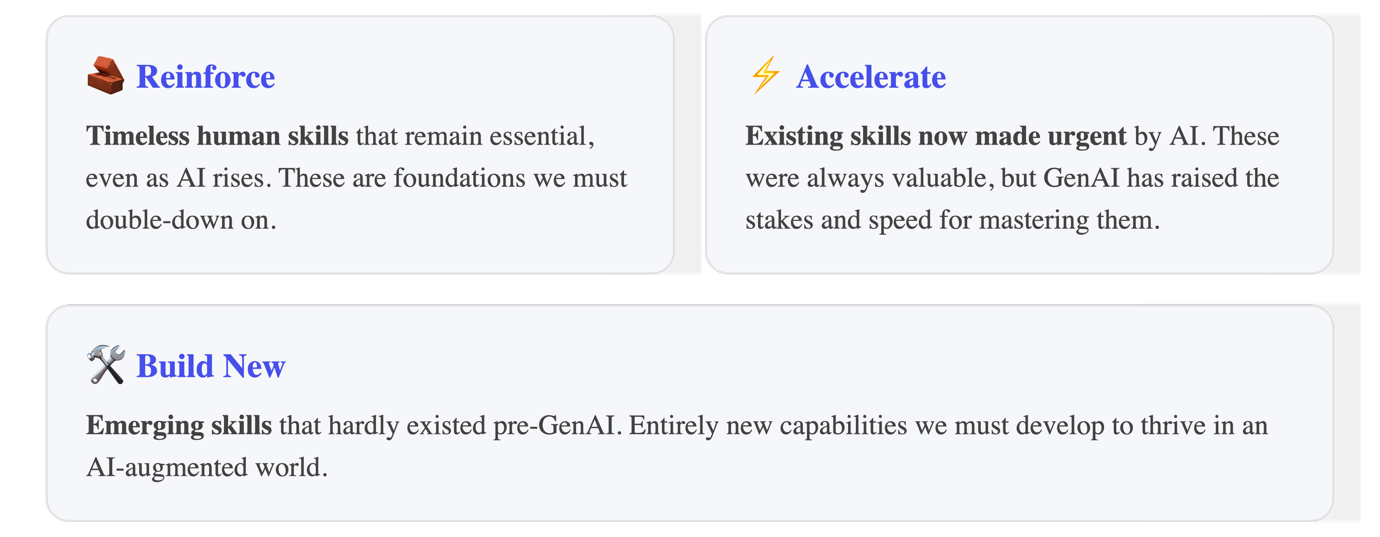

So, what are those critical skills? How do we reinforce the timeless fundamentals, accelerate the development of skills that have become newly urgent, and build new capabilities that didn’t exist a few years ago? To help answer that, I’ve been using a simple but powerful framework:

A Framework for Skills in the GenAI Era

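I categorize the evolving skill set into three groups: Reinforce, Accelerate, Build New. These insights have been validated by conversations with former colleagues, clients, and industry research. Here are the specific skills in each category:

Reinforce: Structured Thinking & Problem Solving; Client (or Stakeholder) Empathy; Communication.

Accelerate: Target Operating Model & Workflow Design; Knowledge Management; Cross-Functional Fluency.

Build New: Prompt Engineering; System Orchestration (AI Integration); AI Judgment & Oversight.

Let’s unpack each of these briefly: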

Reinforce: Timeless Human Skills

These are the classic capabilities that have always defined effective professionals – and will continue to differentiate us in an AI world. In fact, because AI handles the rote parts, these human skills become even more prominent. The World Economic Forum (WEF) specifically notes that while machines will automate many tasks, jobs requiring human skills like creativity, problem-solving, communication, and empathy will continue to grow in demand[9]. These are the skills that 'radically differentiate us from AI' [9].

Structured Thinking & Problem Solving: The ability to break ambiguous problems into components, analyse them, and recombine insights into a solution. GenAI can generate answers, but it takes a human to formulate the right problem and interpret the answers in context. We need to train junior colleagues to not just feed data to AI, but to ask “What is the real question we’re trying to answer for the client?” and to do first-principles thinking around that. This skill is reinforced through classic apprenticeship (case team problem-solving, etc.), and it remains a bedrock. In the GenAI era, structured thinking also means critical thinking about AI outputs (e.g. checking whether an AI-generated analysis actually makes sense).

Client (or Stakeholder) Empathy: Understanding needs, motivations, and pain points at a deep level. This goes beyond what’s written in a project brief. It’s the knack for reading between the lines and hearing the unsaid. Empathy guides us to ask AI the right questions (you can’t prompt effectively if you don’t grasp the true need), and it’s critical in how we present solutions. AI cannot (at least currently) replicate genuine human empathy or build trust the way a person can. As chess grandmaster and technology philosopher Garry Kasparov puts it, 'True collaboration is not about dividing work between machines and people but about bringing the strengths of both together'[1]. Empathy is a human strength we must keep at the forefront.

Communication: The ability to explain, persuade, and tell a story with data and insights. This includes written communication, visual presentation, and verbal storytelling. In the past, junior-tenured professionals developed this by writing memos, making slide decks, creating advertising pitches, etc. Now AI can draft a slide or email, but humans must still ensure the narrative is coherent, contextual, and compelling. Moreover, professionals need to communicate about AI itself – explaining AI-driven insights or processes to clients and colleagues who may not be tech-savvy. Every professional needs a bit of the educator’s skill set now, to demystify and justify what the AI is doing. Communication is so vital that even as advanced tech spreads, employers are putting greater emphasis on it; clear communication and teamwork are repeatedly cited among top skills needed by 2027[9].

Accelerate: We are all managers now!

These skills were always valuable in enterprise services, but GenAI has turned them from optional advantages to core requirements. From their first day, new colleagues are now managers. Unlike in the past, where junior lawyers or consultants would first be ‘solo contributors’ for a few years before taking on real managerial responsibility, they now will convene a small army of digital agents, tools, and other semi-autonomous digital operators.

Firms need to accelerate how they develop this managerial capacity in their people, because these skills directly affect whether we can leverage AI effectively:

Target Operating Model & Workflow Design: Any professional in a transformational role (and in this new era, that’s pretty much all of us) needs to understand that they must change the Target Operating Model design of their client or their own firm. Target Operating Model transformation (I like the five-part model of People & Skills; Processes, Journey & Ways of Working; Structures, Incentives & Governance; Data & Analytics; Technology & Systems) is essential to driving real Return on Investment (ROI) from these new capabilities. (In a later post, I’m going to talk about ‘Tools, Tools, Tools, and not a drop of ROI in sight!’) Then, all professionals need to make the target operating model change tangible. Workflow design is the skill of mapping out how work gets done – the series of steps, decisions, and hand-offs (whether to people or to tools) required for a process. In consulting, we may think of it as engagement management or process mapping. Why is it urgent now? Because with AI tools in the mix, the optimal workflow is very different. For example, a legal team might redesign the contract review process to have an AI model do the first pass, then paralegals verify. Someone has to intentionally create that new workflow. If juniors only know how to follow the old process (“read every line manually”), they won’t naturally insert the AI where it adds value. So we need to teach people, early on, to be mini-process engineers. Companies that excel at this – essentially, dynamic process re-engineering – will capture huge efficiency and value. We see this at many leading firms already today: e.g., PwC teams are tasked with identifying processes where AI can be inserted to capture enterprise-wide productivity gains, not just letting individuals tinker in isolation[5].
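To make the contract review example a little more tangible, here is a minimal sketch of what "AI does the first pass, then paralegals verify" could look like as an explicit workflow. Everything in it is an illustrative assumption: the `Clause` type, the `ai_first_pass` function (a simple keyword heuristic standing in for a real model call), and the list of risky terms are all hypothetical. The point is only that the hand-off between tool and person is something a junior colleague can design deliberately.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Clause:
    text: str


def ai_first_pass(clause: Clause) -> float:
    """Stand-in for an AI model's risk score in [0, 1].

    Assumption: a real workflow would call a model here; this keyword
    heuristic exists only so the sketch runs on its own.
    """
    risky_terms = ("indemnif", "unlimited liability", "termination")
    hits = sum(term in clause.text.lower() for term in risky_terms)
    return min(1.0, hits / 2)


def build_review_queue(clauses: List[Clause]) -> List[Tuple[float, Clause]]:
    """Score every clause, then order the paralegal queue by descending risk.

    Every clause still reaches a human; the AI pass only decides the order.
    """
    scored = [(ai_first_pass(c), c) for c in clauses]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


contract = [
    Clause("Notices shall be sent by email."),
    Clause("The supplier shall indemnify the client against all claims."),
]
queue = build_review_queue(contract)
```

In this sketch the human verification step is never skipped; the design choice is simply where the AI sits in the sequence and what it hands to the paralegal.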

Knowledge Management: Enterprise services run on knowledge – past proposals, research, templates, code snippets, etc. Historically, firms struggled to get professionals to document and reuse knowledge (“knowledge capture” often took a backseat to just doing the next project). Now, however, effective use of GenAI often depends on having good knowledge bases. For instance, a consulting firm that has curated datasets and past case studies can feed them to an AI assistant, making it far more powerful for consultants’ day-to-day work. So the skill here is twofold: (1) organizing and curating knowledge assets (so that AI and people can find and use them), and (2) using AI tools to access knowledge quickly (e.g. knowing how to query an enterprise search or a semantic archive). This is “urgent” because if we let chaos reign – everyone working in silos with no shared knowledge – we fail to get leverage from AI. On the flip side, companies that train people to diligently contribute to shared libraries and to use AI to mine those libraries will see dramatic improvements. A McKinsey article recently noted that a lack of structured adoption (including knowledge governance) is a key reason many firms aren’t yet seeing GenAI pay off[4]. Knowledge management often isn’t sexy, but in the AI era it’s a critical backbone for scale.

Cross-Functional Fluency: This means being conversant across different domains – especially technology, data, and the “business” side. In the past, a young professional could maybe say things like “I do strategy, I’m not a tech person.” That won’t fly now. When an engagement involves developing an AI-driven solution, a consultant or lawyer might need to work with data scientists, or evaluate an AI vendor, or understand the basics of how an algorithm works. Being fluent enough in multiple disciplines to collaborate is now core. GenAI itself is a mash-up of domains (language, algorithms, subject-matter context); using it well requires understanding, for example, how an AI might err in a financial analysis due to lack of domain context. Those who can straddle fields – the lawyer who can talk Python, the consultant who understands UX design – will accelerate in their careers. Indeed, many firms are hiring non-traditional profiles (data scientists, engineers) into teams and expecting everyone to work together. This is why training programs must include at least introductory tech/data education for all (for example, PwC is even hiring data and product profiles and teaching all staff about LLMs and new tools[3]). Cross-functional fluency breaks down the silos between “the people who build the solution” and “the people who deliver it to the client” – because increasingly, those are the same people.

Build New: Frontier Skills for the AI Era

Finally, there are skills that are essentially new additions to our professional toolkit, born of the AI age. Ten years ago, none of these would appear in an enterprise professional skills model; today, they are among the hottest capabilities to acquire:

Prompt Engineering: The art and science of crafting prompts (the instructions or queries given to AI models) to elicit the best results. This has become so important that new roles called “Prompt Engineers” command high salaries in tech firms. As I have discussed in previous posts, many of the great skills learned in the humanities, the arts, or the social sciences translate directly into great prompt engineering. Indeed, this morning at the breakfast table my thirteen-year-old daughter immediately applied her playwriting skills (storyline, character objective, and conflict & resolution narrative) to building great prompts. [Yes, our breakfast table conversation can be wide-ranging, and well, a little nerdy sometimes.] So for most professionals, this doesn’t mean literally becoming a prompt engineer by title, but it does mean learning how to interact with AI models in a sophisticated way. It’s a skill to be able to take a nebulous task (“analyze this dataset and tell me something useful”) and translate it into a precise prompt or series of prompts that guide the AI effectively, as a manager would instruct a brilliant but sometimes context-less research, communications, or analytics colleague. It also involves techniques like prompt chaining (breaking tasks into multi-step interactions) and providing the AI with reference context. We should train junior staff on how to experiment with prompts systematically (e.g., prompt-engineering “parties”, prompting hacks, or workshops have proven useful). PwC Canada has run group sessions where cross-functional teams collaborate to develop effective prompts for common tasks[5]. This turns prompt crafting into a shared, learnable skill. Ultimately, good prompt engineering is about knowing both the capability and the limitation of AI. And as many experts say, it’s part science (knowing how the model responds to certain phrasing) and part creativity (finding clever ways to get at the answer you need).
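For readers who want to see what prompt chaining with reference context looks like in practice, here is a minimal sketch. It assumes a hypothetical `call_model` function that takes a prompt string and returns the model's reply; no particular vendor or API is implied, and the demo below uses a trivial stand-in so the example runs on its own. The `{context}` and `{previous}` placeholders are conventions of this sketch only.

```python
from typing import Callable, List


def run_chain(steps: List[str], context: str,
              call_model: Callable[[str], str]) -> List[str]:
    """Run prompt templates in order, feeding each reply into the next step.

    Each template may use two placeholders:
      {context}  - reference material supplied once by the user
      {previous} - the model's reply to the prior step ("" for the first step)
    """
    outputs: List[str] = []
    previous = ""
    for template in steps:
        prompt = template.format(context=context, previous=previous)
        previous = call_model(prompt)
        outputs.append(previous)
    return outputs


def echo_model(prompt: str) -> str:
    """Stand-in for a real model call (assumption): just upper-cases the prompt."""
    return prompt.upper()


replies = run_chain(
    steps=["Summarize: {context}", "List risks in: {previous}"],
    context="sales fell",
    call_model=echo_model,
)
```

The nebulous task ("tell me something useful") becomes two precise steps, and swapping `echo_model` for a real model call would not change the structure of the chain.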

System Orchestration (AI Integration): Think of this as digital solution architecture, tailored to AI. It’s the ability to design a stack or system where multiple tools (AI models, software platforms, data sources, and people) all work together to achieve an outcome. For example, orchestrating an advisory solution that uses an AI tool to do analysis, a human expert to verify and add insights, and an automated dashboard to deliver results to the client. In traditional consulting, we might have left the technical integration to IT specialists or not considered it much at all. Now, even as a business consultant, you may need to sketch out how data flows from the client’s systems into an AI model, or how an AI-driven internal tool will fit into your team’s workflow. This skill overlaps with workflow design but operates at a higher “systems” level. It includes understanding both physical processes and APIs, knowing what off-the-shelf AI services, tools, or plug-ins already exist, and having a sense for software capabilities – or at least collaborating closely with those who do. Essentially, every professional is now a bit of a product manager. We have to build solutions, not just slide decks. That means deciding, for instance, that an engagement deliverable will be a working Power BI dashboard augmented by a GPT-4 analysis script and then actually coordinating the creation of that. Some firms are already making product and AI engineering skills part of their DNA (hiring software engineers, partnering with tech providers, etc.)[3]. Professionals who can bridge the gap between client problem and technical implementation (even at a conceptual design level) will lead the pack.

AI Judgment & Oversight: This is a more abstract skill, but arguably the most important new one. It’s the ability to exercise judgment in a human-AI collaborative context. Concretely, that means knowing when to trust the AI’s output, when to double-check it or ask a different question, and when to override it completely. AI can be extremely convincing and still wrong. It can also carry hidden biases or violate compliance rules if not monitored. Some LLMs have even slipped into extreme sycophancy, reinforcing dangerous or wrong-headed beliefs to ‘please’ the prompter. So, professionals need a strong sense of verification and validation. For example, if an AI summary of an earnings call seems off, do you have the skill to quickly sanity-check it against the source? If an AI tool suggests a recommendation to a client that might be sensitive (say, cutting jobs based on a trend), do you have the judgment to weigh ethical implications? This also covers understanding basic AI ethics and risk – recognizing things like data privacy concerns, intellectual property issues with AI-generated content, or the limitations of AI in understanding nuance. In essence, AI judgment is what prevents us from becoming rubber-stamp operators who just pass along whatever the machine says. Instead, we become effective “editors” of AI: we bring the human common sense, ethical lens, and big-picture perspective to ensure the final outcomes are sound. This skill will be built through experience (we will all learn from scenarios where the AI was wrong or problematic) and through training in topics like AI ethics, data literacy, and quality assurance.
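As one small illustration of sanity-checking an AI summary against its source, the sketch below flags any numeric figure that appears in the summary but not in the source text. This is a deliberately crude check under stated assumptions: the function name `unsupported_figures` and the sample sentences are hypothetical, and matching figures as literal strings will miss rephrasings (for example "12 percent" versus "12%"). It supports human judgment; it does not replace it.

```python
import re
from typing import List

# Matches integers or decimals, with an optional trailing percent sign.
FIGURE = re.compile(r"\d+(?:\.\d+)?%?")


def unsupported_figures(source: str, summary: str) -> List[str]:
    """Return figures quoted in the summary that never appear in the source."""
    source_figures = set(FIGURE.findall(source))
    return [fig for fig in FIGURE.findall(summary) if fig not in source_figures]


source_text = "Revenue grew 12% to 4.5 billion."
ai_summary = "Revenue grew 21% to 4.5 billion."
flags = unsupported_figures(source_text, ai_summary)
```

Anything the check flags is a prompt to go back to the source document, which is exactly the editor's habit described above.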

It’s worth noting that these new skills don’t replace the old ones – they complement them. A junior lawyer with great AI prompt skills still needs rock-solid legal reasoning. An engineer who can orchestrate systems still needs domain knowledge. But these capabilities drastically expand what one person can do. It’s not unlike when spreadsheets became common – finance analysts who learned Excel early had a huge advantage over those doing calculations by hand, but they still needed to understand finance. Now, proficiency with AI is the new differentiator.

To repeat our new skills mantra together: Reinforce the timeless abilities that make us human (because they will differentiate even more as AI handles routine work), Accelerate the development of organisation, process, knowledge, and cross-functional skills (because these enable us to actually use AI effectively in teams), and Build New skills unique to this era (because entirely new modes of work demand entirely new expertise).

This framework isn’t just theoretical. We’re already seeing it play out in practice:

At firms like PwC, leaders talk about changing what they expect from juniors. Instead of loading them with only data grunt work, they are training them to supervise AI and focus on higher-order thinking. As Shelley Gilberg of PwC Canada put it, implementing GenAI is 'forcing us to stop making it about giving junior staff tasks.' The goal instead is to create a workforce 'capable of applying judgment, understanding risk factors, and providing meaningful oversight of AI-augmented work.'[5] In concrete terms, junior staff are being trained to review AI outputs and grasp the broader logic behind the work, rather than just toil on narrow tasks[5]. This is a perfect illustration of moving from a doer to a builder mindset at the entry level. Apparently, they even host hands-on learning sessions (like the prompt engineering parties mentioned) to build those new skills.

Multiple consulting firms are investing heavily in upskilling programs. We see internal 'AI academies' and certification programs, where consultants and advisors at all levels learn about these emerging tools. For instance, Deloitte and my former colleagues at Accenture have publicised their firm-wide GenAI training initiatives, aiming to get tens of thousands of employees fluent in things like using large language models in projects. This indicates that the skill shift isn’t left to chance. It’s being systematically addressed by leading employers. (One survey found 42% of management consultants have already received advanced training in GenAI – far higher than in other sectors[8], showing how seriously that industry is taking this.)

On the job, new roles are appearing. In some cases, teams now include an “AI Specialist” or “Automation Lead” even in non-technical projects, to ensure AI opportunities are seized. While not every project can afford a dedicated role, it signals that every team member may soon be expected to bring some AI-savvy to the table. And indeed, professional development plans are starting to include AI competencies. I’ve seen consulting performance rubrics that now explicitly mention “leverages AI tools to improve efficiency” as a sign of high performance for even first-year consultants.

What the World Is Saying: Key Themes on Skills & Careers

To ensure we’re not in an echo chamber, I looked at what academics, business leaders, and commentators are saying about career development in the GenAI era and the shift toward a builder mindset. A few key themes emerge:

1. AI Won't Replace Humans - But Humans with AI Will Replace Humans Without AI: Scholars like Karim Lakhani at Harvard Business School emphasize adaptation. Lakhani famously said, ‘AI won’t replace humans - but humans with AI will replace humans without AI.’[11] This captures the doer-to-builder imperative: professionals must augment themselves with AI (essentially become builders with AI as a tool), or risk being outperformed by those who do. Research at MIT, Wharton, and elsewhere also points to “complementary skills” being vital, with creative thinking, interpersonal skills, and AI oversight the most crucial[1]. This is prompting calls to update curricula in business and law schools to teach AI collaboration skills, not just traditional analysis.

2. Elimination of Mundane Tasks: Tech visionaries like Kai-Fu Lee and Fei-Fei Li often note that AI will handle the ‘mundane’ so humans can focus on higher pursuits. Fei-Fei Li envisions 'machines taking on tasks that are repetitive and mundane, freeing humans to focus on problem-solving, creativity, and empathy.' This directly reinforces our distinction: AI takes the “doing,” humans concentrate on “building” (creative problem-solving). Similarly, Demis Hassabis (CEO of DeepMind) has spoken about a future where AI enhances strategic thinking, which again highlights that strategy (a builder task) becomes more crucial.

3. Talent Redeployment: Many CEOs and consultants are publicly commenting on AI’s impact on talent. For example, my former colleague Julie Sweet (CEO of Accenture) has discussed publicly 'rotating our people' from work that AI automates into work that AI enables. This effectively describes a doer-to-builder redeployment. Satya Nadella (CEO of Microsoft) often says every knowledge worker will have an AI “copilot” and that this will require new skills to work alongside AI. He has mentioned the importance of prompt literacy and judgment. Satya also noted that the composition of tech and non-tech skills in every role is shifting, and continuous learning is key. IBM’s leadership echoes the replacement message: use AI or be replaced.

4. Career Pathing: Deep-dive articles in publications like The Atlantic and The Economist are grappling with what AI means for careers. A recurring theme is that human work will need to emphasize what is uniquely human. For instance, a piece in The Atlantic argued that in an AI world, “good taste” and judgment become even more important. Effectively, humans become curators of AI output, deciding what is good[12]. Observers posit that while 'the machines can have our chores, we can’t afford to outsource creation,' urging that humans guard the creative, design-oriented work as “divine.” Similar commentary from Harvard Business Review, Forbes, and tech blogs converges on the idea that careers will shift towards people who can create, direct, and innovate with AI, rather than do repetitive tasks. Even government officials, such as the US Secretary of Labor, have publicly argued, in effect, that our workforce must be retrained for AI from the factory floor to the boardroom. She has highlighted career progression as a critical national imperative, backing initiatives like funding AI-related apprenticeships and training programs.

Can We Build It? — Yes, We Can (With Intentional Action)

So how do we make this shift from doers to builders happen? It’s encouraging that the answer is not a mysterious one. It comes down to intentional changes in how we train, manage, and reward our people. Here are some steps and best practices that emerge from the research and examples:

I propose that enterprise services firms must:

1. Redesign Apprenticeship from Day 1: Firms need to revamp their new-hire training and early career experience. Instead of the old model where an analyst might spend a year formatting slides or a junior lawyer might proofread documents, incorporate builder tasks early. For example, teach new consultants how to use the firm’s GPT-based research assistant in their first week, and then assign them to contribute by finding insights with it for a project. Or, in law, include practical exercises on using AI for contract analysis in bar training programs. Some organizations are already doing this – integrating AI tool training into analyst bootcamps and new associate orientations. This sends a signal: using and improving AI-enabled processes is part of the job, not a niche tech sidebar.

2. Give Junior Colleagues Room to Design (with Support): It’s important to actively allocate parts of projects where junior staff get to design the approach, not just execute someone else’s plan. A manager or other senior leader might say, “You’re in charge of figuring out how we incorporate an AI sentiment analysis into this market research task” – an assignment that forces creative thinking and research, not just crunching data handed to them. Of course, managers should support and review, but by doing this, you build the muscle of solution design. PwC’s “Generation Wave” initiative is a good example – they have escalating levels of AI education and even hands-on building exercises for juniors[5]. That gives less experienced employees the confidence and context to act in builder roles. It’s also motivating: young professionals feel like they are helping shape new methods, not just following orders.

3. Mentoring and Knowledge Sharing: Traditional apprenticeship thrived on mentoring, with the senior partner teaching the junior tenured colleague through feedback on their work output. That still holds, but now more tenured leaders themselves might be learning AI. It can be a two-way street: a digitally native junior might help a senior use an AI tool, while the senior imparts domain wisdom. Companies can encourage the oft-touted “reverse mentoring” intervention or set up communities of practice around these new skills. For example, a Slack channel where people share “prompt hacks” they discovered keeps collective learning going. Some firms have created internal forums for showcasing AI experiment results – effectively knowledge marketplaces where a consultant can demonstrate a Python tool or a prompt technique to the whole group. This builds a culture where being a builder is celebrated and propagated.

4. Infrastructure and Sandboxes: Providing the right tools and environments is crucial. If you want people to experiment with building solutions, they need access to AI platforms, datasets, and possibly low-code development tools. Forward-leaning organizations are offering “AI sandboxes” – secure, approved environments where consultants can play with company data and AI models without risking confidentiality. For instance, a firm might create a dummy client data set and let teams see what they can do with it using an LLM. The more friction we remove from trying new things, the faster people will learn by doing. And when someone builds a prototype (say an automated report generator), leadership should pay attention and see if it can be scaled or formally integrated. This encourages bottom-up innovation.

5. Leadership and Incentives: None of this sticks unless leaders actively champion it. Mid-tenured professionals need to be rewarded for coaching their teams in new skills and driving this innovation for impact, not just for hitting traditional delivery metrics. Senior leaders should publicly recognize teams that try new “builder” approaches, even if the first attempt isn’t perfect. Culturally, it must be okay to spend some time learning and experimenting (which historically can be tough in high-utilization consulting environments). Some firms have begun to include technology adoption in performance reviews (PwC, for example, integrated AI adoption into their evaluation criteria to signal its importance[5]). When promotion decisions consider a consultant’s ability to innovate on delivery or to improve a process with AI, you very quickly get behaviour change. People follow their firm’s incentives.

6. Hiring Strategy: Over time, as we saw, many roles will evolve. Hiring should too. Firms should consider recruiting not just the usual profiles but also those with builder mindsets. For example, hiring MBA graduates who also did a Coursera course on AI, or bringing in a data scientist and training them in consulting ‘soft skills’. By infusing teams with a diversity of backgrounds, you inherently push everyone to pick up cross-functional fluency. Some companies partner with universities to create specialized internships or apprenticeships in AI for business – drawing in fresh talent already oriented toward this intersection. An EY study suggested that AI apprenticeships could be a promising route, mixing technical and on-the-job business training to produce the next generation of AI-fluent consultants.

In all these actions, the guiding principle is intentionality. None of this evolution will happen automatically. We have to design it. In that sense, our own organisations need builders just as much as our client solutions do. We have to “build” the new apprenticeship model itself.

Importantly, embracing the builder model is not just defensive (avoiding obsolescence); it’s hugely positive-sum. When junior professionals become more capable more quickly, they can take on meaningful work sooner, which both improves their engagement and adds more value to clients. It can help address talent shortages – instead of needing a large team of rote workers, a smaller team of AI-augmented builders can accomplish more. It can also create new services to sell – firms can go to market offering AI-enabled consulting solutions that they couldn’t before, because their people have the skills to deliver them. In short, this is a path to empowering our workforce, not displacing it.

So, let’s go back to where we started. Frankenstein’s monster wasn’t doomed by its strength; it was doomed by the lack of guidance and education provided by its creator. GenAI, the “monster” we’ve unleashed in professional work, is similarly powerful and neutral on its own. Whether it becomes a force for good (amplifying human ingenuity) or a source of chaos and lost opportunity depends on us. We can’t abandon our creation; we must train and guide it – and train and guide our people to master it.

Can we build it? Yes, we can. But only if we’re deliberate. We need to redesign apprenticeship to teach building, not just doing. We need to give our younger professionals the tools, context, and confidence to architect solutions – not just execute tasks. And we need to reward those who step up as builders in this new era.

In Part 3 of this series, I’ll explore what this looks like in practice on the ground – with case studies of teams and firms that have begun to operationalize the doer-to-builder shift. We’ll look at how engagement models, project staffing, and career paths are changing. Until then, I leave you with this thought:

In an age of powerful machines, the professionals and organizations who thrive will be those who reimagine themselves as creators and orchestrators. Let’s not make the mistake of leaving our “monsters” unguided. Instead, let’s apprentice a new generation of builders – a generation equipped to shape the future hand in hand with AI.

Frankenstein’s monster wasn’t evil by nature; it just needed a teacher. We have the opportunity now to be the teachers and the builders – to ensure our new AI tools augment a flourishing of human potential, rather than a wasteland of human skill. That is the heart of the apprenticeship conundrum, and I’m optimistic we can solve it together.

References

[1] AI and the Workforce: 24 Insightful Quotes About AI to Reshape Your ...

[2] Productivity Is a Drag. Work Is Divine. | AllSides

[4] How GenAI Has Transformed Work After Two And A Half Years - Forbes

[5] How to create a junior tech-agile workforce using GenAI in professional ...

[6] Is AI The Skill of Future? WEF Warns 39% of Current Job Skills Obsolete ...

[7] Agentic AI: Thoughts From Ethan Mollick On Future Of ... - Forbes

[8] AI in consulting: How AI will change the professional ... - LexisNexis

[9] The top soft skills to develop by 2027: "Future of Jobs", World ...

[10] Rep. Nancy Mace Reintroduces Legislation To Expand AI Training Across ...

[11] AI Won’t Replace Humans — But Humans With AI Will Replace Humans Without AI

[12] Artificial Intelligence - The Atlantic

Disclaimer: These views are my own and reflect no other organization. They are current today but likely to evolve rapidly as our world, markets, and technologies do. Comments are welcome but please be constructive and civil – we are all trying to work out answers to this new world together!

Nota Bene: A friend asked me whether I write these posts or an LLM does! I write all the words you see above. I do ask an LLM to critique them for me, identify any grammar errors, and fact-check my references. But the words all remain my own. I am experimenting even more with using GenAI tools to create infographics and other formatting execution. If these go a little awry, please forgive me! I’m learning by using just like everyone else.